Published on 2023-12-20 14:25 by Pouya Pournasir

Introduction

In robot teleoperation, operators and robots collaborate through shared autonomy to achieve goals while reducing operator workload. Trust in the robot is critical to the operator's success. With low trust, operators do not delegate tasks to the robot, even when doing a task themselves creates a high workload that degrades their performance. Research shows that repairing trust is challenging, but social HRI suggests that robots can rebuild trust in social situations through social strategies such as acknowledging mistakes and promising to do better. This study explores integrating these social strategies and interfaces into teleoperation to enhance trust repair. We compared a social cue-based interface to a conventional one, hypothesizing that adding social cues would increase the effectiveness of social trust-repair strategies. We found that participants viewed the social cue-based interface as more capable, a perception that can lead them to place more trust in the system.

Design Criteria

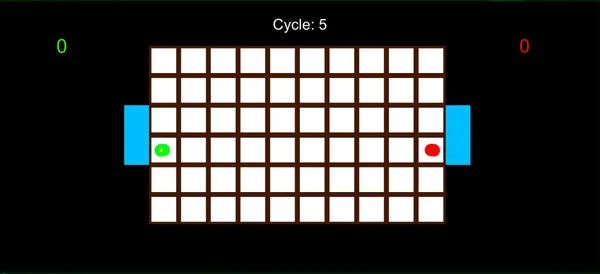

We designed a scenario where the operator has to control a shared autonomy robot and handle two tasks at the same time. In some cases, one task can be delegated to the robot if the operator trusts the robot’s autonomous capabilities.

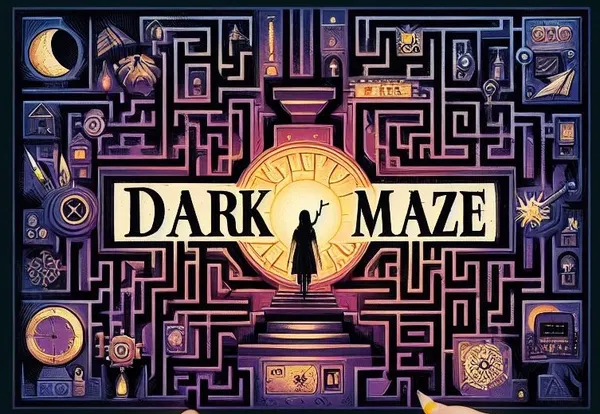

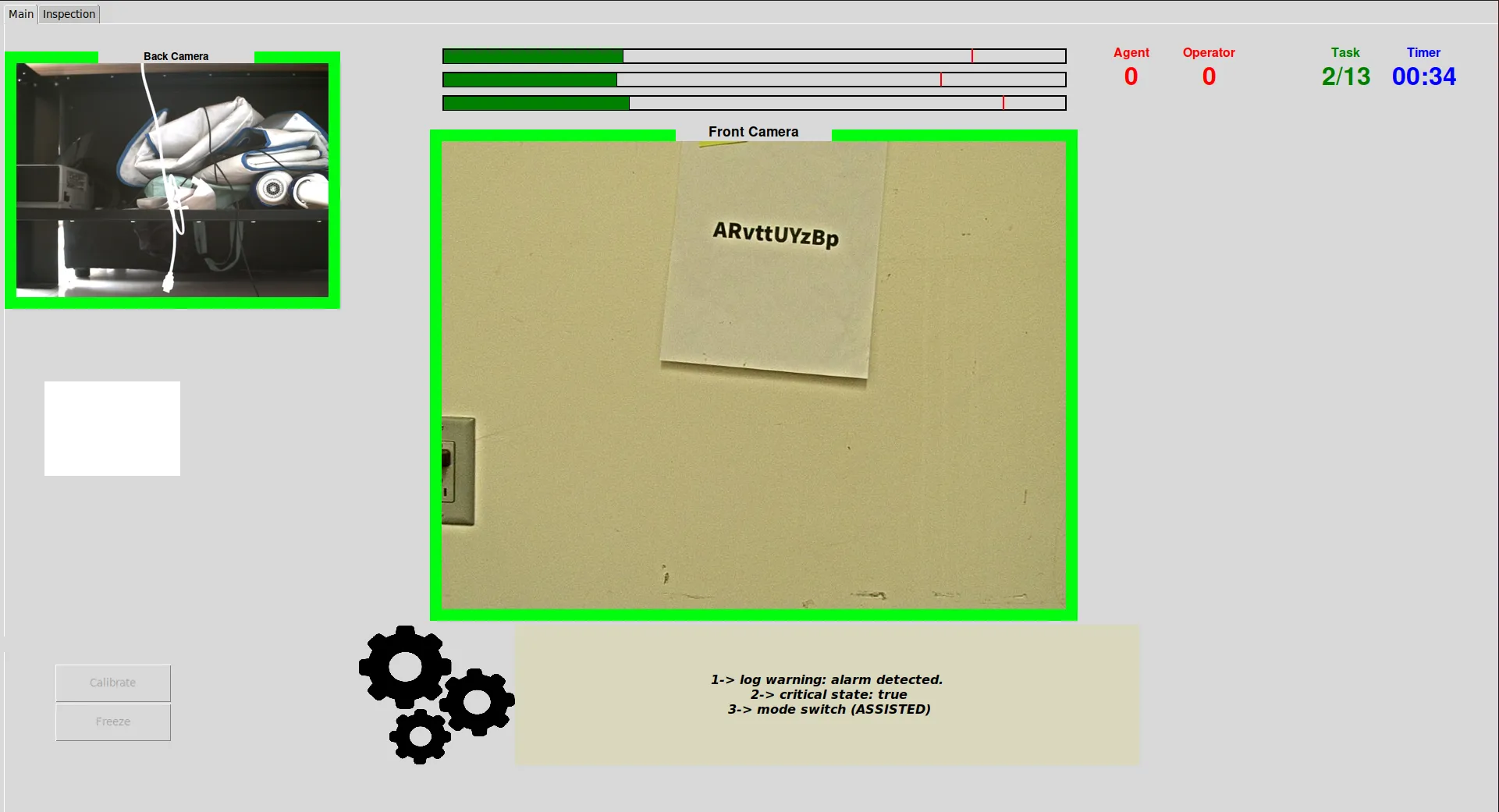

We had to design a video-game-like mission for the operator to meet our experimental goals. We took inspiration from video games like Uncharted and Call of Duty, which follow a rigid, unbreakable narrative, even though a robotic mission has far more uncontrollable variables than a game. We had to engineer each part of the journey so that we could be sure we were analyzing the right data. We also had to script the number of mistakes the operator and the robot made during the experiment, so that each one happened at the right place and the right time. The main challenge was priming participants to believe that the mistakes were genuine, made by themselves or the robot, and not planted by the creator of the system!
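The post does not say how the scripted mistakes were triggered. One way to guarantee that each mistake happens "at the right place and the right time" is to tie it to a mission checkpoint and let it fire exactly once. The sketch below assumes waypoint-based triggers. The names `ScriptedMistake`, `MistakeSchedule`, and `on_waypoint_reached` are hypothetical, not taken from the project.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ScriptedMistake:
    """A hypothetical pre-planned mistake tied to a point in the mission."""
    waypoint: int      # checkpoint index at which the mistake should happen
    actor: str         # who the mistake should appear to come from: "robot" or "operator"
    description: str
    fired: bool = False


@dataclass
class MistakeSchedule:
    """Holds every scripted mistake and releases each one exactly once."""
    mistakes: list[ScriptedMistake] = field(default_factory=list)

    def on_waypoint_reached(self, waypoint: int) -> list[ScriptedMistake]:
        # Return (and mark as fired) all pending mistakes scheduled for this waypoint,
        # so revisiting a checkpoint never replays a mistake.
        due = [m for m in self.mistakes if m.waypoint == waypoint and not m.fired]
        for mistake in due:
            mistake.fired = True
        return due
```

Keying mistakes to mission progress rather than wall-clock time means a slow or fast participant still experiences each mistake at the same point in the narrative.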

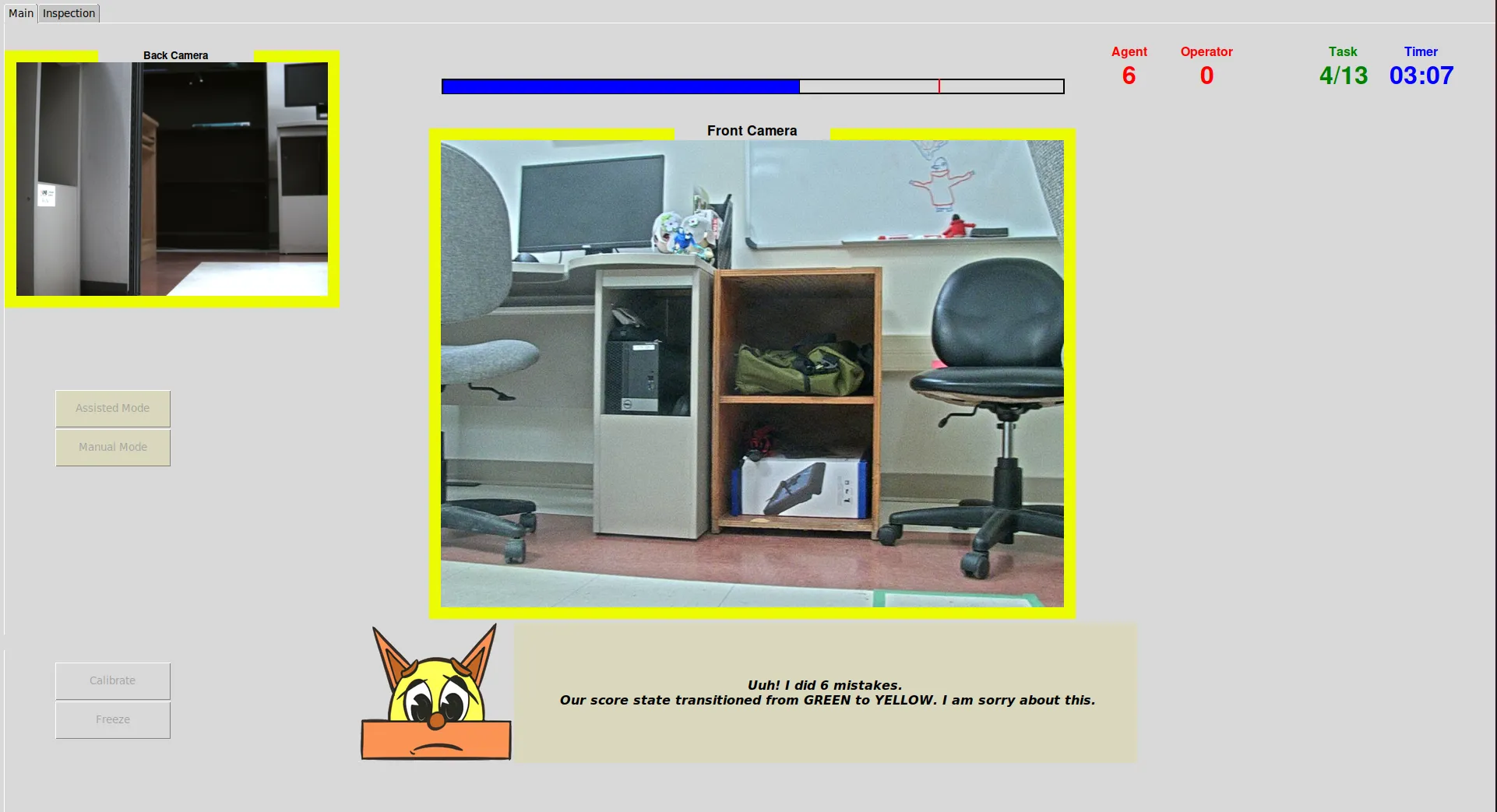

Two conditions of the experiment: social and non-social. Which one increases trust?

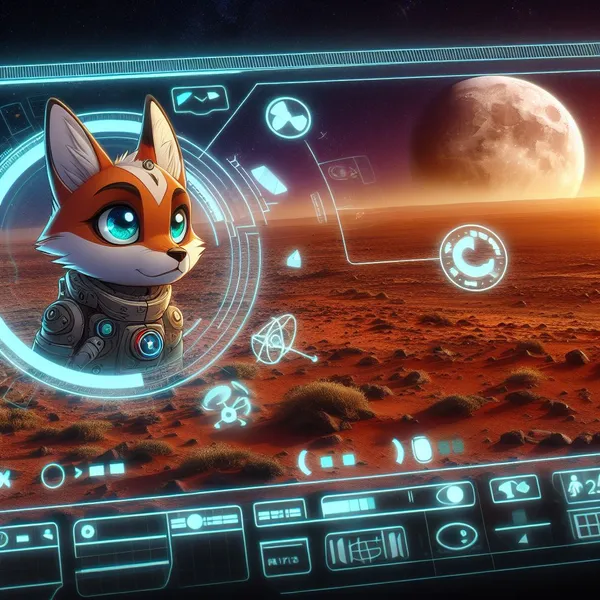

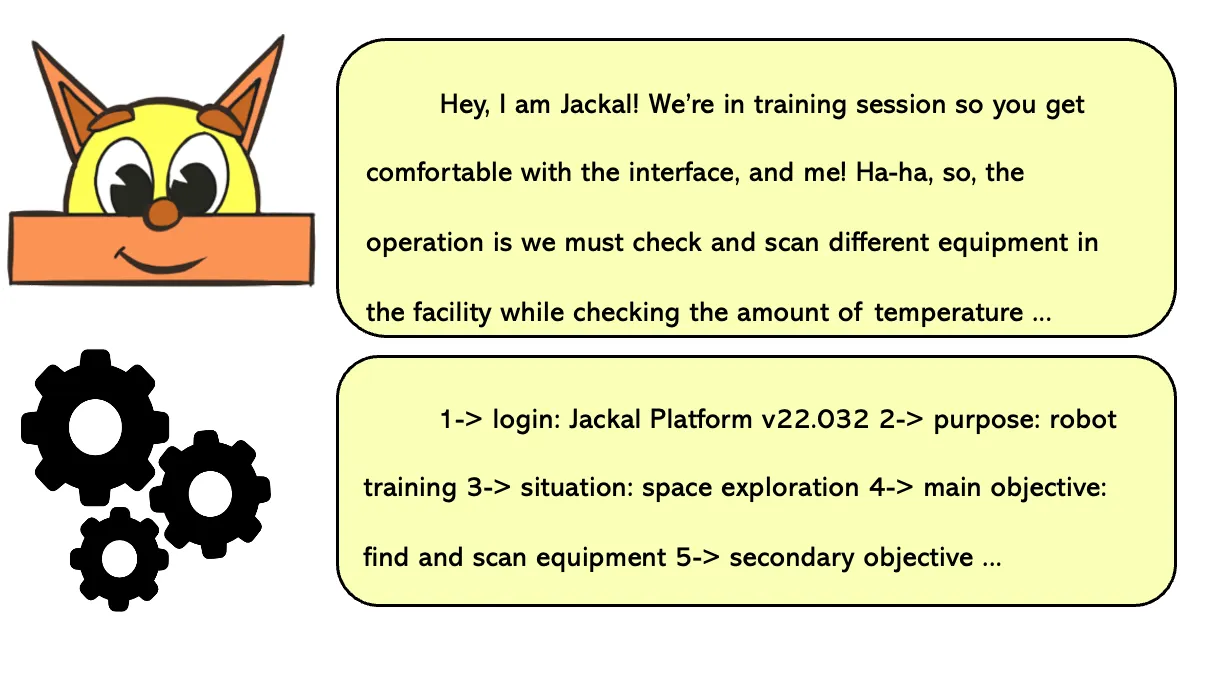

To create our social interface, we built a social agent that uses human-like language and a simple animated avatar, in contrast to a conventional machine-like terminal. Both represent the AI (or agent) that is embedded in the robot and communicates with the user who controls the robot. We had to create a narrative where:

- Trust forms between the user and the agent (trust formation).

- The agent breaks trust by performing badly (trust violation).

- The agent asks the user about delegating tasks to it in the future.

The interface was inspired by video games such as Star Fox 64. I used the State pattern to control each part of the experiment.
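To show how the State pattern can drive the three narrative phases listed above, here is a minimal sketch. Each phase is an object that decides which phase comes next when an event arrives. The class names and the event strings (`task_completed`, `robot_failed`, `delegated`, `declined`) are hypothetical illustrations, not the project's actual code.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class ExperimentState(ABC):
    """One phase of the experiment; it decides which phase comes next."""
    name = "base"

    def enter(self, ctx: Experiment) -> None:
        ctx.log.append(f"enter {self.name}")

    @abstractmethod
    def handle(self, ctx: Experiment, event: str) -> None: ...


class TrustFormation(ExperimentState):
    name = "trust_formation"

    def handle(self, ctx: Experiment, event: str) -> None:
        if event == "task_completed":
            ctx.transition(TrustViolation())


class TrustViolation(ExperimentState):
    name = "trust_violation"

    def handle(self, ctx: Experiment, event: str) -> None:
        if event == "robot_failed":
            ctx.transition(DelegationRequest())


class DelegationRequest(ExperimentState):
    name = "delegation_request"

    def handle(self, ctx: Experiment, event: str) -> None:
        if event in ("delegated", "declined"):
            ctx.decisions.append(event)  # record the participant's choice
            ctx.transition(Finished())


class Finished(ExperimentState):
    name = "finished"

    def handle(self, ctx: Experiment, event: str) -> None:
        pass  # ignore all events once the run is over


class Experiment:
    """Context object: forwards events to whichever state is active."""

    def __init__(self) -> None:
        self.log: list[str] = []
        self.decisions: list[str] = []
        self.state: ExperimentState = TrustFormation()
        self.state.enter(self)

    def transition(self, new_state: ExperimentState) -> None:
        self.state = new_state
        new_state.enter(self)

    def dispatch(self, event: str) -> None:
        self.state.handle(self, event)
```

Events that do not apply to the current phase are simply ignored. For example, a `robot_failed` event during trust formation does nothing, which keeps the scripted narrative from being derailed.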

Setup

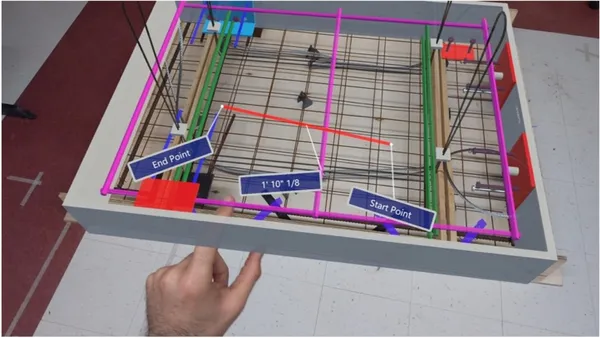

To create a teleoperation system, I used the Robot Operating System (ROS) to program a robot called Jackal. We connected the robot to a PC and a DualShock 4 controller. Each of these was a ROS node, and they communicated through ROS's message-passing system using Python. The controller sends commands to the robot, and the robot sends camera data to the PC.
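The node layout described above can be illustrated without a ROS installation. This sketch replaces ROS topics with a tiny in-process publish/subscribe bus (`TopicBus`). That bus is an assumption made so the example runs anywhere; it is not the rospy API. The topic names `cmd_vel` and `camera/image` are conventional ROS-style names and are assumed here. The stick-to-velocity mapping, the speed limits, and the deadzone are also illustrative assumptions.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable


class TopicBus:
    """Tiny in-process stand-in for ROS topics (this is NOT the rospy API)."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> None:
        # Deliver the message synchronously to every subscriber of the topic.
        for callback in self._subs[topic]:
            callback(msg)


@dataclass
class VelocityCommand:
    """Simplified stand-in for a ROS Twist message, keeping only ground-robot fields."""
    linear_x: float   # forward speed (m/s)
    angular_z: float  # yaw rate (rad/s)


class ControllerNode:
    """Maps gamepad stick values in [-1, 1] to velocity commands on 'cmd_vel'."""

    def __init__(self, bus: TopicBus, max_linear: float = 1.0,
                 max_angular: float = 1.5, deadzone: float = 0.1) -> None:
        self.bus = bus
        self.max_linear = max_linear
        self.max_angular = max_angular
        self.deadzone = deadzone

    def _shape(self, value: float) -> float:
        # Ignore small stick drift, then clamp to the valid range.
        if abs(value) < self.deadzone:
            return 0.0
        return max(-1.0, min(1.0, value))

    def on_axes(self, left_x: float, left_y: float) -> None:
        # Assumed layout: stick up = forward, stick left (positive x) = turn left.
        cmd = VelocityCommand(self._shape(left_y) * self.max_linear,
                              self._shape(left_x) * self.max_angular)
        self.bus.publish("cmd_vel", cmd)


class RobotNode:
    """Consumes velocity commands and publishes camera frames."""

    def __init__(self, bus: TopicBus) -> None:
        self.bus = bus
        self.last_cmd: VelocityCommand | None = None
        self.frame_id = 0
        bus.subscribe("cmd_vel", self.on_cmd_vel)

    def on_cmd_vel(self, cmd: VelocityCommand) -> None:
        self.last_cmd = cmd  # a real robot would forward this to its motor driver

    def publish_camera_frame(self) -> None:
        self.frame_id += 1
        self.bus.publish("camera/image", {"frame_id": self.frame_id, "data": b""})


class OperatorPC:
    """Receives the camera stream (a real PC would render it for the operator)."""

    def __init__(self, bus: TopicBus) -> None:
        self.frames: list[dict] = []
        bus.subscribe("camera/image", self.frames.append)
```

In the real system each node is a separate process and ROS handles message delivery. The data flow is the same, however: commands travel from the controller to the robot, and images travel from the robot to the PC.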

Dialogue System (Model-View-Controller)

Just like conventional dialogue-driven video games that show a dialogue box and an avatar, I implemented a dialogue system called Avalogue that follows the Model-View-Controller (MVC) pattern. The model is simply two spreadsheets (instead of a database) that contain information about each dialogue object and each animation sprite, such as a talking, sad, or happy face. The dialogue is shown word by word, accompanied by a brief sound, to mimic old dialogue-driven games (e.g., Star Fox 64).

Unlike in video games, our system was real-time, so the agent had to react to different events while talking. My main challenge was to pause the agent's dialogue midway, let the agent react to the event, and then resume the conversation. Conventional dialogue systems rarely support this. To solve it, I created a Control class called Avalogue, a composition of the dialogue and avatar classes I had implemented earlier, with a deque that holds each Avalogue object. Using the Update loop pattern in the Avalogue (Control) class, each new event creates a new Avalogue that is placed at the top of the stack, and its data is then sent to the dialogue view and the avatar view. Before switching to this solution, I had tried using semaphores to control the flow of dialogues, since each dialogue could be considered a thread.
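The pause-and-resume behavior described above can be sketched with a `collections.deque`. Normal lines are appended to the back, while event reactions are pushed to the front, so they pre-empt the current line. The interrupted line keeps its word cursor and continues where it left off. The class names (`DialogueLine`, `AvalogueItem`, `AvalogueController`) and the callables standing in for the dialogue and avatar views are hypothetical. This is a minimal sketch, not the project's code.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class DialogueLine:
    """Model row: the text to say and which avatar sprite to show (e.g. 'happy', 'sad')."""
    text: str
    sprite: str


class AvalogueItem:
    """One dialogue in progress; it remembers how many words have been revealed."""

    def __init__(self, line: DialogueLine) -> None:
        self.line = line
        self.words = line.text.split()
        self.shown = 0

    def step(self) -> str:
        # Reveal one more word and return the visible text so far.
        self.shown = min(self.shown + 1, len(self.words))
        return " ".join(self.words[: self.shown])

    @property
    def done(self) -> bool:
        return self.shown >= len(self.words)


class AvalogueController:
    """Controller: owns the deque and pushes updates to the two views each tick."""

    def __init__(self, dialogue_view: Callable[[str], None],
                 avatar_view: Callable[[str], None]) -> None:
        self.queue: deque[AvalogueItem] = deque()
        self.dialogue_view = dialogue_view
        self.avatar_view = avatar_view

    def say(self, line: DialogueLine) -> None:
        """Queue a normal line behind whatever is already pending."""
        self.queue.append(AvalogueItem(line))

    def interrupt(self, line: DialogueLine) -> None:
        """React to a live event: put it on top so the current line pauses."""
        self.queue.appendleft(AvalogueItem(line))

    def update(self) -> None:
        """Called once per tick of the update loop."""
        if not self.queue:
            return
        current = self.queue[0]
        self.dialogue_view(current.step())    # a view could also play the per-word blip here
        self.avatar_view(current.line.sprite)
        if current.done:
            self.queue.popleft()               # the paused line (if any) resumes next tick
```

Because the paused line stays in the deque with its cursor intact, no threads or semaphores are needed. The update loop only ever works on whatever sits at the front.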

Experiment Design

Technical Aspects

For other features, I used additional design patterns such as Singleton and Observer. Using baseCanvas as a parent class for the numerous UI elements let me reposition or resize all of them in a uniform way. I wrote a shell script that automated the start of each experiment (passing arguments, opening the different scripts, etc.). I also had to do socket programming to send messages from my personal laptop to the PC to control parts of the experiment. Version control with Git saved my life: I added each new feature in its own branch, and when a feature broke the system, I could revert to the main branch.
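Singleton and Observer often appear together as a process-wide event hub that UI elements and experiment logic subscribe to. The post does not show how the two were combined, so the `EventManager` class and the event name `robot_failed` below are hypothetical. The sketch creates the shared state in `__new__` rather than `__init__`, because `__init__` would run again on every `EventManager()` call and wipe the registered observers.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable


class EventManager:
    """Process-wide event hub: a Singleton that implements the Observer pattern."""
    _instance: EventManager | None = None

    def __new__(cls) -> EventManager:
        # Create the single instance (and its observer table) only once.
        if cls._instance is None:
            instance = super().__new__(cls)
            instance._observers = defaultdict(list)
            cls._instance = instance
        return cls._instance

    def subscribe(self, event: str, callback: Callable[[Any], None]) -> None:
        self._observers[event].append(callback)

    def unsubscribe(self, event: str, callback: Callable[[Any], None]) -> None:
        self._observers[event].remove(callback)

    def notify(self, event: str, payload: Any = None) -> None:
        # Iterate over a copy so observers may unsubscribe while being notified.
        for callback in list(self._observers[event]):
            callback(payload)
```

Any module can call `EventManager()` and receive the same hub. For example, the avatar view and a logger can both react to a `robot_failed` event without knowing about each other.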

Lessons Learned

Building this system single-handedly was a difficult but rewarding experience. Writing 5,000 lines of code across numerous components, while juggling several roles at the same time, taught me a lot of software engineering concepts. If I were to do it again, I would not use the State pattern and would instead make the system stateless (e.g., driven by an update loop). To debug a particular state, I had to go through every state one by one until I reached the one I wanted. I wish I had refactored my code more often (something like a regular refactoring day) to make it easier to debug; by the end of the project, debugging was frustrating. Each new feature broke something else, which is where I realized the mental toll of technical debt. But overall, I am proud of this project.
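One way to read the "stateless" alternative is to derive the current phase from a single value instead of storing it, so a debugging session can jump straight to any point. The sketch below assumes a fixed, time-based mission timeline. The phase boundaries in `TIMELINE` and the names `phase_at` and `MissionLoop` are hypothetical. Real phases also depended on participant actions, and the same idea would apply to replaying a recorded event log instead of a clock.

```python
from __future__ import annotations

from bisect import bisect_right

# Hypothetical mission timeline: (start time in seconds, phase name), sorted by time.
TIMELINE = [
    (0.0, "trust_formation"),
    (120.0, "trust_violation"),
    (240.0, "delegation_request"),
    (300.0, "finished"),
]
_STARTS = [start for start, _ in TIMELINE]


def phase_at(t: float) -> str:
    """Pure function: the phase depends only on the mission clock."""
    if t < 0:
        raise ValueError("mission time cannot be negative")
    return TIMELINE[bisect_right(_STARTS, t) - 1][1]


class MissionLoop:
    """Update loop that stores only the clock; the phase is recomputed every tick."""

    def __init__(self, start_time: float = 0.0) -> None:
        self.t = start_time            # debugging can start at any point in the mission
        self.seen: list[str] = []      # phases visited, recorded for inspection

    def update(self, dt: float) -> str:
        self.t += dt
        phase = phase_at(self.t)
        if not self.seen or self.seen[-1] != phase:
            self.seen.append(phase)
        return phase
```

With this approach, testing the delegation request means constructing `MissionLoop(start_time=240.0)` rather than replaying trust formation and trust violation first.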

Written by Pouya Pournasir
